Regulating artificial intelligence (AI) presents unique and profound challenges. AI systems operate across borders, leverage global datasets, and are shaped by rapidly evolving machine learning technologies. Governments attempting to impose regulatory frameworks on AI face the dual difficulty of understanding and anticipating the implications of these complex systems while contending with the internet’s virtual, borderless environment. This lack of physical boundaries makes it nearly impossible to enforce national laws uniformly: jurisdictions overlap, and foreign entities remain outside the purview of domestic regulators. As a result, well-intentioned regulatory attempts often struggle to create meaningful safeguards and can inadvertently hinder innovation, promote compliance theater, or fail to address systemic risks that transcend national borders.

Article Highlights

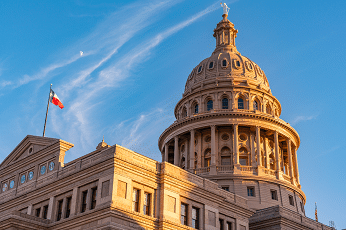

Texas’ Responsible AI Governance Act (TRAIGA)

In the past few weeks, Texas has advanced House Bill 1709, the Texas Responsible AI Governance Act (TRAIGA), which aims to address algorithmic discrimination and ensure ethical AI deployment. It proposes stringent obligations for developers, distributors, and deployers of AI systems, including the need to mitigate discrimination against protected classes, produce detailed compliance documents, and adhere to “reasonable care” standards to avoid disparate impacts.

TRAIGA applies to a broad spectrum of AI systems, from foundational models to deployment tools, and includes hefty penalties for non-compliance. It establishes the Texas Artificial Intelligence Council, a powerful regulator with extensive rulemaking authority to ensure ethical AI use. While the bill has some positive aspects, such as clarifying key terms and limiting enforcement to state authorities (which also means it is toothless globally), it retains significant flaws, including potentially overly expansive liability and cumbersome compliance requirements that disproportionately burden smaller entities and innovators, handicapping startups.

Dean W. Ball with the Mercatus Center at George Mason University explains, “Say a business covered by TRAIGA (which is to say, any business with any operations in Texas not deemed a small business by the federal Small Business Administration) wants to hire a new employee. What might be some uses of AI covered by TRAIGA, even under this new definition of “substantial factor”?” The possibilities, “require the deployer—that is, any company in the economy not considered a small business—to write these algorithmic impact assessments.”

Local Legislation Like TRAIGA Will Fail to Meaningfully Tackle AI Concerns

Despite its ambitious scope, TRAIGA is fundamentally flawed in addressing the real challenges posed by AI. Its broad application and reliance on “reasonable care” liability incentivize organizations to prioritize risk aversion over meaningful innovation. This approach leads to excessive documentation and procedural overhead, creating a bureaucratic burden that hinders smaller players while favoring well-resourced incumbents. The bill’s reliance on disparate impact theories of discrimination, where liability can arise without intent to harm, further complicates compliance and encourages overly conservative deployment of AI systems.

The legislation also fails to account for the internet’s borderless nature and the global diffusion of AI technologies. Even with TRAIGA in place, companies and bad actors operating outside Texas — or the United States — remain unaffected. This undermines the bill’s efficacy, as many AI-related harms, such as misuse or ethical violations, can originate from entities beyond the reach of state laws. In much the same manner, the past decade’s incessant efforts to regulate social media have accomplished little more than wasting legislators’ time and taxpayer resources.

Furthermore, the creation of a powerful regulatory body like the Texas Artificial Intelligence Council introduces the risk of regulatory capture, where large tech firms can shape policies to their advantage, stifling competition and innovation. The council’s broad mandate and enforcement powers may inadvertently encourage censorship and limit the scope of permissible AI applications, fostering a climate of fear rather than innovation.

The False Sense of Security and Unsafe Outcomes

By enacting TRAIGA, Texas risks instilling a false sense of security among its citizens and businesses, suggesting that comprehensive regulations will protect them from AI’s potential harms. Don’t misunderstand me: ethical deployment and mitigating discrimination are very good goals. The problem lies in local governments trying to regulate virtual, globally accessible infrastructure when their jurisdiction ends at their borders. The bill’s expansive and poorly targeted provisions are more likely to result in performative compliance than substantive safety measures. This illusion of control can be particularly dangerous, as it diverts attention from the pressing need for globally coordinated, adaptive, and technology-informed AI governance.

In essence, TRAIGA exemplifies the pitfalls of regulatory overreach in an interconnected digital world. It fails to grapple with the complexity of AI’s challenges while creating barriers to innovation, leaving both the state and its citizens vulnerable to the very risks the legislation aims to mitigate. Instead of leading, TRAIGA reflects an outdated approach that prioritizes control over collaboration, ultimately falling short of addressing AI’s nuanced and global implications.
